Persuasion in Parallel: Alexander Coppock’s New Book Argues in Favor of Effective Arguing

His name is Alexander Coppock, and he approves this message.

For Coppock, an associate professor of political science and a faculty fellow with the Institution for Social and Policy Studies (ISPS), it’s a simple message about a complicated concept. One with significant consequences for our political system, the influence of ads, and how we relate to one another outside of election campaigns.

The message is this: Even though Americans are deeply divided on many issues and often view the other side as incapable of learning from new information or changing their minds, new information in favor of an opposing position does not — as previously thought — cause people to double down on their preexisting positions. On the contrary, information intended to persuade for or against a policy or a candidate causes people to update their positions in the direction of that intention, at least a little bit.

In fact, Coppock’s research shows that people from different groups respond to information in the same direction and by about the same amount, regardless of their age, gender, race, ethnicity, political party affiliation, or the positions they held originally. He calls this aligned dynamic “Persuasion in Parallel,” which is the title of his new book, soon to be published by The University of Chicago Press.

We recently spoke with Coppock about his book, our polarized society, and what his update of political science theory could mean for how we think about how we think.

ISPS: If your book has an antagonist, it is the theory of “motivated reasoning.” What is motivated reasoning, as understood by previous academic research?

Alexander Coppock: Motivated reasoning is rooted in the idea that people don’t derive rational conclusions from the information they get. Instead, they reason in a motivated way toward an attitude that they want to hold. Motivated reasoning is when you just don’t want to hear an argument against your position. When you do get that information, you twist it to your own purposes, so you feel you are even more right than when you started.

ISPS: What is the supposed mechanism behind this theory?

AC: The idea is that people hold two basic kinds of goals when confronted with new information. The first is accuracy. They want to get it right. That’s not a problem for democracy. But the theory argues that the second kind of goal is directional, and its influence could be a problem. These goals represent the attitudes you want to wind up holding. So, if I give you a piece of information that challenges your worldview, motivated reasoning’s directional goals will guide you to counter-argue, think about all the reasons this new information is wrong, and make you believe even more strongly in your previous position. Under this view, it is counterproductive to try to change people’s minds. In the scientific literature, this is called backlash.

ISPS: In your book, you argue that this is completely wrong, and backlash does not generally exist. What led you to investigate this theory?

AC: In graduate school, my collaborator Andrew Guess and I read an important, regularly cited study from 1979 on motivated reasoning. We suspected that the research design contained two significant weaknesses: a biased way of measuring attitude change and a failure to assign participants randomly to experimental and control groups. We replicated the study with a much larger, more diverse sample, randomized the treatments, and reached the exact opposite conclusion.

ISPS: You found that new information doesn’t lead people to distort it to further entrench their preexisting views but instead persuades them in the direction of the evidence. And this is also true for people on the other side of an issue receiving information against their preexisting views. Both groups decrease or increase their support by a similar amount. This is what you call “persuasion in parallel.”

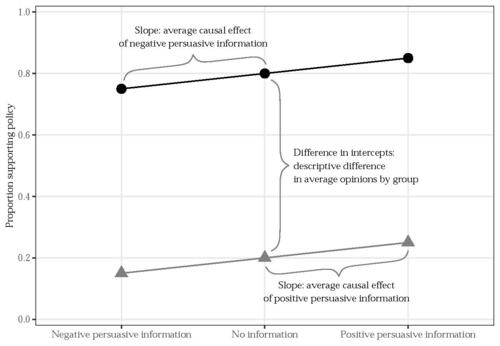

AC: Right. And the number one thing I want people to understand is that this pattern is really commonplace. The book presents many examples of newly reanalyzed research. This is what happens almost every single time I’ve ever looked. And in a basic representative chart, the pattern looks like this:

ISPS: Why do you think this has gone unnoticed for decades?

AC: One reason is that we don’t often see this kind of picture. Literally. Because it is a non-result. Usually, researchers are trying to find ways in which some variable affects groups differently, and then they report those differences. But, as with the circles and triangles in the sample chart, when researchers don’t discover differences — when the experimental treatment causes the same effect, of the same size, in the same direction — it usually goes unreported. The hypothesis under investigation didn’t work out, so they move on to something else. This other finding remains hidden.

ISPS: What is a real-world example of how persuasion in parallel operates?

AC: Let’s say somebody writes an op-ed in favor of the United States supporting Ukraine. Persuasion in parallel tells us that this opinion piece will move people a little bit in the direction of supporting Ukraine regardless of a reader’s starting position. The same would be true of an op-ed arguing against U.S. support of Ukraine. People would move a small amount in the direction of not supporting Ukraine regardless of what they thought before reading it. And this is true about many topics. If an advocacy group runs a TV ad that uses scary music and shares information about how fracking is bad for the environment, this makes people like fracking less.

ISPS: You aren’t arguing that persuasive information causes people to abandon their preexisting beliefs, only that it causes them to move their opinions in the direction of the new information “a little bit.” What do you generally mean by “a little bit” in this context?

AC: In experiments I’ve conducted and reviewed, we are most often talking about policy attitudes. Very roughly, persuasive information causes a five-percentage-point effect right after presentation of the information. People might move five points more or less in favor of the policy under discussion. Again, this effect depends on the intended persuasive direction of the information and not on an individual’s preexisting position or any other aspect of their identity. It’s important to note that this effect was even smaller when trying to influence something like presidential vote choice. The effect measured in one meta-study of presidential ads on support of the candidates was closer to 0.7 or 0.8 percentage points.

ISPS: Why the smaller effect?

AC: There are some theories on why some attitudes are more malleable than others. Often it appears to depend on how much information you have on candidates at the time. It’s hard to influence someone’s overall opinion with new information when people already know so much.

ISPS: Do these persuasive effects last?

AC: The effects I’ve measured appear to last for a time. They go down after 10 days, though not all the way to zero.

ISPS: Any idea why?

AC: There is only one little shred of information about why. I think that what happens, and this is speculation, is when you get a new piece of information, you are told that it’s important. The information arrives with some salience. So, the immediate response is a response to the information plus some sense it’s important that you consider it. I think the salience dissipates faster than the information itself. I don’t know that for sure. Recency bias — simply newer information taking priority over older information and then being supplanted by even newer information — could also explain this decay over time.

ISPS: Don’t people also react differently to information based on their group affiliations?

AC: Absolutely. When investigating the power to persuade, there are two kinds of experimental treatments. The first involves facts and arguments — persuasive information. The second is a group cue. This involves information about which groups in society support which positions. Someone seeking to deploy group cues to influence public opinion is not trying to persuade you on the substance of an issue but just trying to clue you in to what other people think.

ISPS: Is that effective?

AC: Group cues are typically more potent than information-only treatments. People are strongly influenced by solidarity with the groups they identify with. But group cues have different effects depending on which group you are in. For example, imagine an ad by Democrats in support of Policy X that says, “Democrats support Policy X.” This would lead people who identify with Democrats to increase their support of Policy X. But people who do not identify as Democrats would be less likely to support Policy X.

ISPS: This is different from what you would expect from persuasion in parallel, right?

AC: Correct. When appealing to people only with persuasive information — facts and arguments — both Democrats and non-Democrats would be moved a little in the direction of that information. That’s persuasion in parallel. But group cues do not work this way. If you are trying to persuade broadly, do not use group cues. You will turn a segment of the audience against that message.

ISPS: Given the zero-sum nature of electoral politics and the wide gulfs separating Americans on many core issues — from abortion to immigration to taxes to guns — do you find this dynamic to be hopeful? Is a little bit ever enough? After all, parallel lines never intersect.

AC: The acceptance of motivated reasoning made it seem pointless, even counterproductive, to attempt changing minds with information. The idea was that reasoning is broken. You can’t persuade people because they are just so addled by partisanship. But we now know that’s not true. The baseline norm is persuasion in parallel, which represents a major corrective in our understanding of the world. If I were to advise a political party (which is something I do not do), it would be to try to persuade people who are on the other side. Because it does work.

ISPS: But there is a cost, right? Particularly on the individual level?

AC: Absolutely. There is some subtlety here, because we need to consider two different outcomes. One is influencing someone’s attitude toward a particular policy. The other outcome is affective. How does the person being influenced feel afterward? How does that person feel about me, the influencer? It is true that people do not like being persuaded. They dislike the messenger. They want to stop listening. They argue back. That’s a side effect of persuasion.

ISPS: Isn’t that a supposed source of backlash, which you have said does not generally exist?

AC: Yes, but the affective side effect does not short-circuit the overall effect of persuasive information. In the absence of group cues, people generally change their minds a little bit in the direction of the information. And they also get angry. It’s both.

ISPS: What can be done about that? That doesn’t sound very encouraging.

AC: Well, I think the message is that we can’t give up. If you give up, you lose. I know it seems like the bulk of information we lob at one another in political communications makes everyone angry all the time. Like all we are doing is turning the volume up. But we cannot stand down. Because persuasive information is effective, you cannot just exit from the information exchange. We have to keep campaigning, knocking on doors, buying TV ads. This is the lesson of “Persuasion in Parallel.” Especially in your own life, you can, in fact, persuade people who disagree with you. Try harder. Understand there can be some interpersonal cost. Don’t use group cues. Use information.