AI Accelerates Research Across Social Sciences: Insights from Yale’s DISSC

José-Antonio Espín-Sánchez found the collection of 15 books in a library of the Instituto Hispano Cubano, in the Spanish city of Seville.

An associate professor of economics, Espín-Sánchez has been collecting data on all immigrants from Spain to the Americas since the time of Christopher Columbus and linking their family trees. One 16th-century collection he sought contained contracts with ship captains and passenger listings. Yale had only the 10th volume, and Harvard had none. The collection had never before been scanned. No intelligence, artificial or otherwise, had likely attempted to examine the text and extract names, dates, and relationships for large-scale analysis.

With the help of ChatGPT, a generative artificial intelligence tool built on a large language model (LLM), Espín-Sánchez reduced the time needed to transcribe and process each record from hours to minutes, potentially cutting the overall project time from decades to years.

“The idea is that when we have the whole dataset, we can create family trees for the last 500 years,” he said.

But with this new and rapidly evolving technology, researchers must take great care to ensure accuracy and overcome obstacles. At a recent collaborative session sponsored by Yale’s new Data-Intensive Social Science Center (DISSC), the Institution for Social and Policy Studies (ISPS), and the Institute for the Foundations of Data Science (FDS), Espín-Sánchez joined four other social scientists and a room of researchers to share what they are learning about how AI can help them learn.

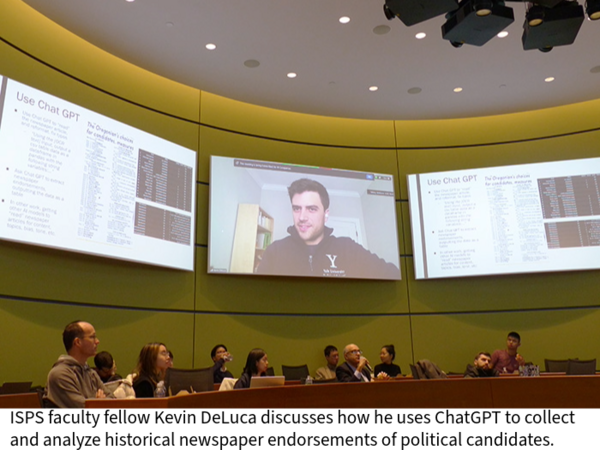

Kevin DeLuca, an ISPS faculty fellow and assistant professor of political science, discussed how he uses ChatGPT to collect and analyze historical newspaper endorsements of political candidates. The AI can help fix errors from more established optical character recognition (OCR) applications and place the data into a structured format, significantly reducing the time and effort required to manually transcribe text from newspaper archives and organize data to more easily answer research questions.

“It spits out a table usually within a couple of seconds, which is pretty great,” DeLuca said.
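The workflow DeLuca describes, in which OCR text goes in and a structured table comes out, can be sketched roughly as follows. This is a minimal illustration and not his actual pipeline: the column names, the prompt wording, and the sample reply are all hypothetical, and the call to the language model itself is deliberately left out.

```python
import csv
import io
import json

# Hypothetical column names; the article does not say which fields are collected.
FIELDS = ["newspaper", "year", "office", "candidate", "party"]


def build_prompt(ocr_text: str) -> str:
    """Build an instruction asking a model to repair OCR errors and return JSON."""
    return (
        "The text below is OCR output from a newspaper endorsement page and may "
        "contain recognition errors. Correct obvious errors, then return a JSON "
        f"list of objects with exactly these keys: {', '.join(FIELDS)}.\n\n"
        + ocr_text
    )


def rows_from_response(response_text: str) -> list:
    """Parse the model's JSON reply, keeping only rows with every field filled in."""
    records = json.loads(response_text)
    rows = []
    for rec in records:
        if all(rec.get(key) not in (None, "") for key in FIELDS):
            rows.append({key: rec[key] for key in FIELDS})
    return rows


def to_csv(rows: list) -> str:
    """Serialize the cleaned rows as CSV text with a header line."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


# A made-up model reply, for illustration only. The second record is
# incomplete, so it is dropped rather than silently entering the dataset.
sample_reply = json.dumps([
    {"newspaper": "Example Gazette", "year": 1952, "office": "Governor",
     "candidate": "Jane Doe", "party": "Independent"},
    {"newspaper": "Example Gazette", "year": 1952, "office": "Senator",
     "candidate": "", "party": "Independent"},
])

rows = rows_from_response(sample_reply)
table = to_csv(rows)
```

Dropping incomplete rows is one possible design choice; a real project might instead flag them for manual review.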

As with Espín-Sánchez’s examination of scanned documents, the AI can struggle with accuracy, often due to OCR errors or formatting issues. For example, scanning old bound books face down often introduces a curve to the lines of text because of how the pages are attached to the spine. And the software can lose its place when following a curve from one line to another across the page, jumping around, breaking up names, and changing the order of the words.

But the researchers have found effective ways to mitigate these problems. And they have begun evaluating the different available AI tools to fine-tune the best approaches for their tasks.

Emma Zang, an ISPS faculty fellow and assistant professor of sociology, biostatistics, and global affairs, used AI to decode 1.5 million legal judgments in China to study the effect of a 2011 change in law on gender inequality in divorce cases. The AI extracted and processed vast amounts of data from 2009 to 2016 — written in Chinese and involving gender, child custody decisions, and housing property disputes — that would have been impractical to analyze manually.

After testing several methods and various AI platforms, Zang and her team found that a fine-tuned GPT-4o mini model achieved accuracy greater than 90% for the data she sought and reduced the project time from years to months.
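An accuracy figure of this kind is typically obtained by comparing the model's output with a sample coded by hand. The sketch below shows one simple way to compute per-field accuracy. It is an assumption about the general approach, not a description of the team's method, and the field names and records are invented for illustration.

```python
def field_accuracy(predicted: list, gold: list, fields: list) -> dict:
    """Return, for each field, the share of records where the model matches the human coder."""
    if not gold or len(predicted) != len(gold):
        raise ValueError("predicted and gold must be non-empty and the same length")
    accuracy = {}
    for field in fields:
        matches = sum(
            1 for pred, ref in zip(predicted, gold) if pred.get(field) == ref.get(field)
        )
        accuracy[field] = matches / len(gold)
    return accuracy


# Hypothetical field names and hand-coded records, for illustration only.
fields = ["plaintiff_gender", "custody_awarded_to"]
gold = [
    {"plaintiff_gender": "F", "custody_awarded_to": "mother"},
    {"plaintiff_gender": "M", "custody_awarded_to": "father"},
    {"plaintiff_gender": "F", "custody_awarded_to": "mother"},
    {"plaintiff_gender": "M", "custody_awarded_to": "mother"},
]
# Invented model output that disagrees with the human coder on the last custody decision.
predicted = [
    {"plaintiff_gender": "F", "custody_awarded_to": "mother"},
    {"plaintiff_gender": "M", "custody_awarded_to": "father"},
    {"plaintiff_gender": "F", "custody_awarded_to": "mother"},
    {"plaintiff_gender": "M", "custody_awarded_to": "father"},
]

scores = field_accuracy(predicted, gold, fields)
```

Reporting accuracy field by field, rather than as a single number, shows which variables the model handles well and which still need manual checking.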

Tom McCoy, an assistant professor of linguistics, used AI to simulate language acquisition in children to better understand how children learn language rules and structures. The AI provided a way to study a process that is difficult to observe directly, and the ability to modify AI training data and algorithms allowed for controlled experiments that would be impractical or unethical with human subjects.

The AI models sometimes failed to capture the nuances of human language learning, leading to less accurate simulations. And the reliance on textual data limited the researchers’ ability to fully replicate the multiple types of input involved when humans acquire language. But McCoy said the technology held tremendous potential for his field.

“This shows how recent advances in AI are enabling us to get at some of these really classic puzzles about how humans are able to learn language in all of its richness,” he said.

Balázs Kovács, an ISPS faculty fellow and professor of organizational behavior, used AI to measure whether ChatGPT stores concepts in a way similar to how humans store them. He and his co-authors studied how well text documents match specific concepts, like book genres or tweets typical of one political party. They found that ChatGPT can achieve high results without the extensive training data required by previous methods, indicating the technology’s potential for advanced text analysis in social science.

In addition, Kovács and his colleagues demonstrated that humans could not distinguish between online restaurant reviews written by humans and those produced by ChatGPT. In fact, even AI detectors could not correctly tell human-written reviews from AI-generated ones.

“There’s a lot of implications for these online reviews,” Kovács said, suggesting trust will decline, manipulation could increase, and regulatory measures and shifts in marketing strategies might be needed. “Review websites need to figure out what to do with this, because they’re going to be in big trouble. If people can’t believe what they read, they’re not going to read them, and they are going to go out of business.”

DISSC Director Ron Borzekowski considered the event “the beginning of an ongoing conversation on this important topic.”

“At the Data-Intensive Social Science Center, we’re thinking about AI as a tool, as an input,” he said. “Of course, there are other issues about societal impacts and what this will do to the world at large, but for now our focus is what this will do to our own profession of producing research.”

The DISSC event was just one of the many campus-wide efforts to address how faculty, students, and staff engage with AI. In August, the university announced a five-year, $150 million investment to support developing, using, and evaluating AI in response to a faculty task force’s recommendations.

“I thought the DISSC event was an excellent example of the task force’s recommendation to bring people together from different corners of the university,” said Jennifer Frederick, associate provost for academic initiatives and executive director of the Poorvu Center for Teaching and Learning. “We need to continue to raise the visibility of this work and propel people in different disciplines to share their experiences and generate new ideas.”

Frederick, who helps lead efforts to implement and mobilize AI investments across campus, said she will be hiring a program manager in the new year to amplify and coordinate the growing number of AI-related events. In the spring, DISSC, ISPS, and the Tobin Center will host two events featuring economist Seth Stephens-Davidowitz, who wrote a data-heavy book on basketball in 30 days using ChatGPT’s analysis tools. On May 9, Yale will host a campus-wide research symposium to showcase AI scholarship.

“I think we are ahead of many peer institutions when it comes to AI research and infrastructure,” Frederick said. “We have a cohesive strategy based on recommendations from a task force representing the entire campus that we are now implementing with a five-year budget. We are taking this opportunity and challenge seriously so we can continue to recruit and retain excellent faculty and students and stay in a position of leadership with AI.”